Let’s start with a question: what if Google could access every page on your website and show your personal data on the search engine results page?

That would be a horror story, but don’t worry: Google and other well-behaved crawlers follow the instructions you give them.

So how do you tell Google and other major search engines which parts of your website they may access?

With robots.txt. Remember the name; it’s not robot.text.

A robots.txt file tells Google’s crawler which pages should be crawled and indexed and which pages should not.

I will give you an example to help you understand better, but first, let’s understand the theory of the robots.txt file.

What is a Robots.txt file?

Robots.txt is a file that tells search engines which pages of your site they may crawl and index and which they should skip.

It keeps well-behaved search engine crawlers out of the areas you list. Keep in mind that it is a set of instructions rather than a lock: the file itself is public, so truly confidential data still needs real protection such as a login.

It’s a plain .txt file uploaded to the root folder of a website, and in it we write directives for search engines.

Example;

User-agent: *

Disallow: /checkout/

# Allow crawling of product pages and categories

Allow: /products/

Allow: /categories/

This is a sample robots.txt file that would work for any website, but the right set of rules differs between an eCommerce website and a blog.
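To see how a crawler would read the sample above, here is a minimal sketch using Python’s standard-library `urllib.robotparser`. The domain `example.com` and the test paths are made up for illustration. This parser applies the first matching rule, which is fine for this sample because its rules do not overlap.

```python
from urllib.robotparser import RobotFileParser

# The sample rules from above, one directive per line.
SAMPLE_RULES = """\
User-agent: *
Disallow: /checkout/
# Allow crawling of product pages and categories
Allow: /products/
Allow: /categories/
"""

parser = RobotFileParser()
parser.parse(SAMPLE_RULES.splitlines())

# example.com and these paths are hypothetical.
for path in ("/checkout/payment", "/products/red-shoe", "/about"):
    allowed = parser.can_fetch("*", "https://example.com" + path)
    print(path, "->", "crawlable" if allowed else "blocked")
```

A path that matches no rule at all, such as `/about` here, is treated as crawlable by default.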

A proper robots.txt file helps you save crawl budget and get your important pages indexed faster.

I will explain how you can set up a proper robots.txt file for your eCommerce website or blog.

Having no robots.txt file at all isn’t a problem, because Google will still crawl your site. A wrongly formatted robots.txt file, however, can stop Google from crawling your pages and get them de-indexed.
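The difference between having no rules and having one wrong rule can be sketched with Python’s standard-library `urllib.robotparser`. The `example.com` URL is a placeholder, `can_crawl` is a helper name invented for this example, and the stray `Disallow: /` stands in for a typical mistake.

```python
from urllib.robotparser import RobotFileParser

def can_crawl(robots_txt: str, url: str) -> bool:
    """Return True if a generic crawler may fetch `url` under `robots_txt`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch("*", url)

url = "https://example.com/blog/my-post/"  # placeholder URL

no_rules = ""                                   # empty file: nothing is blocked
one_wrong_rule = "User-agent: *\nDisallow: /"   # blocks the whole site

print(can_crawl(no_rules, url))        # True
print(can_crawl(one_wrong_rule, url))  # False
```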

New Robots.txt Report in Google Search Console

Google has updated its robots.txt tooling and improved its features and usability.

It’s now more convenient and more effective to use inside Google Search Console. Let’s move on to the main topic so that you can understand what the main update is and how it can save you almost 10 to 20 dollars per month.

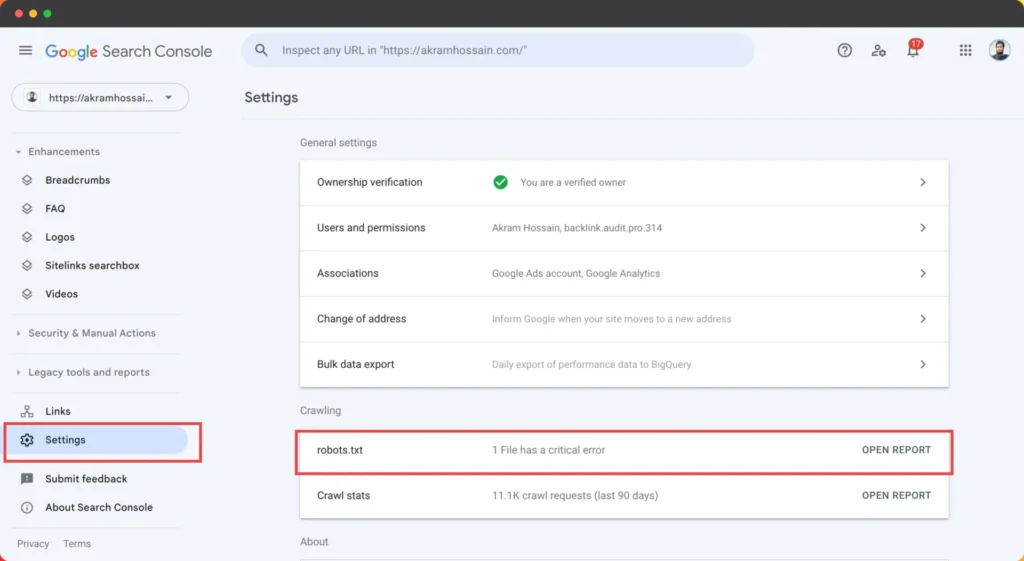

Google added a robots.txt report to Search Console under Settings > Crawling.

Click on “Open Report”, and it will show data for up to 20 robots.txt files.

Google also lets you see the versions of your robots.txt files from the last 30 days, so you can compare changes and recover rules that were deleted by mistake.

Here are the features of the new robots.txt report:

- File: The robots.txt files Google found for your property and its parent domains, including the HTTPS, HTTP, WWW, and non-WWW versions.

- Checked on: The date and time Google last crawled the file, so you always know how fresh the data is.

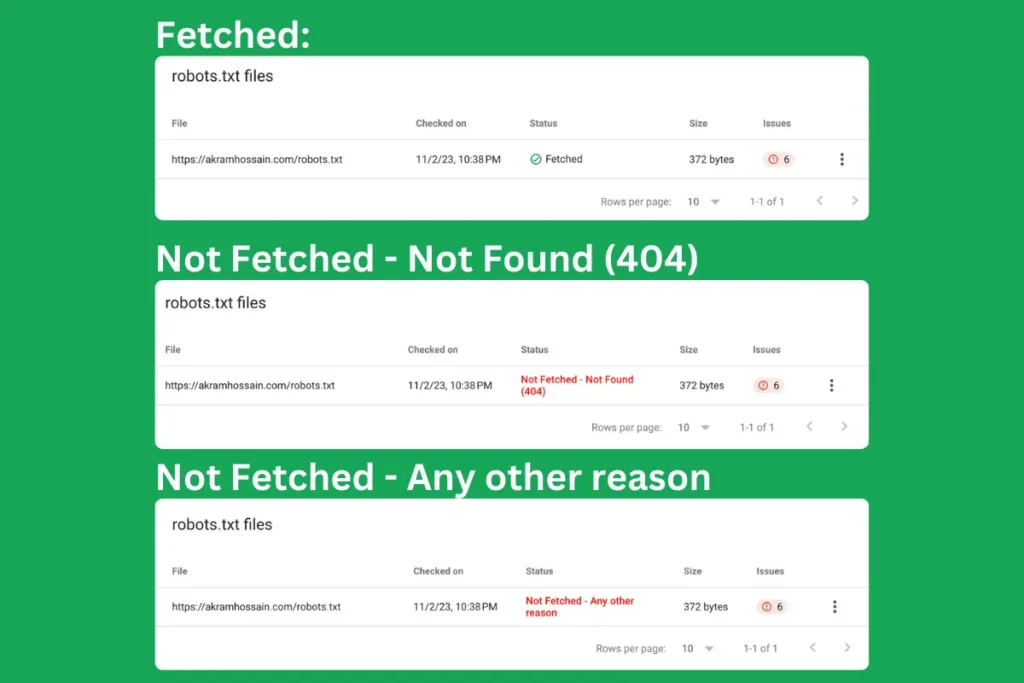

- Status: The result of the last fetch. Fetched means Google retrieved the file without problems; the other values are Not Fetched – Not found (404) and Not Fetched – Any other reason.

- Size: The total size of your site’s robots.txt file.

- Issues: The problems Google discovered in the file, if any.
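Since the report lists a separate robots.txt file for each protocol and host combination, it can help to know which URLs those are. Here is a small Python sketch, where `example.com` is a placeholder domain and `robots_txt_variants` is a helper name made up for this example:

```python
def robots_txt_variants(domain: str) -> list[str]:
    """List the robots.txt URL for each HTTPS/HTTP and WWW/non-WWW variant."""
    # Normalise the input so "www.example.com" and "example.com" behave the same.
    bare = domain[4:] if domain.startswith("www.") else domain
    return [
        f"{scheme}://{host}/robots.txt"
        for scheme in ("https", "http")
        for host in (bare, "www." + bare)
    ]

for url in robots_txt_variants("example.com"):
    print(url)
```

Opening each of these URLs in a browser is a quick way to confirm that every variant serves (or redirects to) the file you expect.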

Request a recrawl

This is an essential feature when you change your robots.txt file and want Google to pick up the new version as fast as possible. It asks Google to re-crawl your updated file.

But remember not to overuse it; if you know Google already crawls your site regularly, there is no need to request a recrawl.

Is this new robots.txt report helpful?

Compared to the previous tool, it’s much more advanced: it lets you request a recrawl and see current and previous file errors in a single dashboard. Before, you might have needed a paid tool for these insights.

Now it simply saves you time and money. So, I think it’s helpful.

How to Use Robots.txt File Properly?

It’s not complicated, and there is no rocket science behind its mechanism.

It’s a simple file, and you can generate it with a WordPress plugin such as Yoast SEO, Rank Math, or All In One SEO.

By default, you will get a simple, well-structured robots.txt file that covers your initial needs.

You can then change it according to your needs to save crawl budget and speed up crawling and indexing.

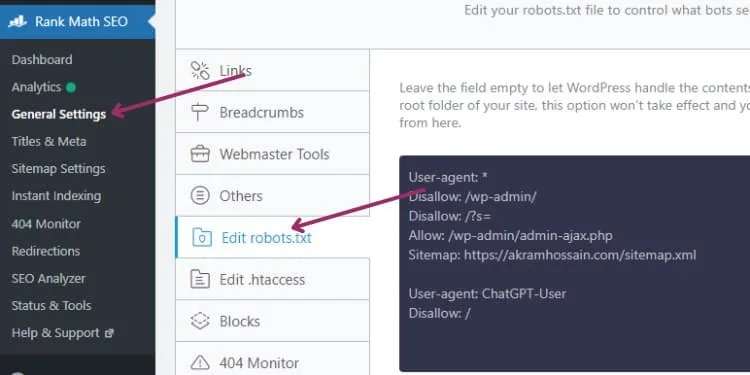

If you use WordPress to manage your website, you can easily generate this file with Rank Math or another SEO plugin; here, I’m using Rank Math.

Rank Math Robots.txt File Edit:

Go to “General Settings” and click on “Edit robots.txt” to open the editing field.

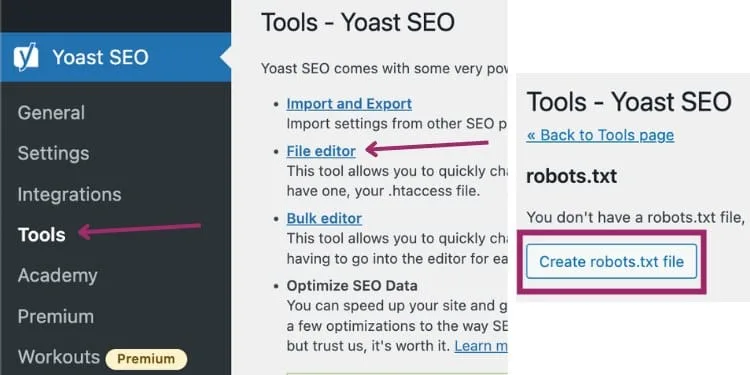

Yoast SEO Robots.txt File Edit:

Install and activate the Yoast SEO plugin and go to “Tools”. You will find several editing options there.

Select “File Editor” and click the “Create robots.txt file” button to generate a basic file for your website.

You can read the full Yoast SEO documentation on creating and editing the robots.txt file.

Let’s have a look at a common robots.txt file;

User-agent: *

Disallow: /wp-admin/

Allow: /wp-admin/admin-ajax.php

Sitemap: https://akramhossain.com/sitemap_index.xml

User-agent: *

Disallow: /wp-content/uploads/wpo-plugins-tables-list.json

This is a common and simple way to tell search engines what to crawl and index on your website and what to leave out.

Blogs, real estate sites, and other sites with a small number of pages can use this format.

It can be changed in certain cases depending on the website’s needs.

Let’s go through the above file line by line:

Line – 1: User-agent: *

Here, we address all user agents, meaning the crawlers of Google, Bing, Baidu, Yandex, Yahoo, AOL, and everything else online. The [*] means the rules that follow apply to every user agent.

Line – 2: Disallow: /wp-admin/

Now, we disallow Google and other major search engine crawlers from crawling our [/wp-admin/] directory, which holds the WordPress admin dashboard and other pages that visitors should never reach from search results.

Line – 3: Allow: /wp-admin/admin-ajax.php

Here, we make an exception for [/wp-admin/admin-ajax.php]. Themes and plugins call this file from the front end, so Google and other search engine crawlers need access to it to load your pages properly.
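Lines 2 and 3 overlap, so a crawler needs a rule to decide between them. Google documents that the most specific (longest) matching path wins and that Allow wins a tie. Here is a minimal Python sketch of that precedence; `is_allowed` is a made-up helper that only handles plain path prefixes, not wildcards, and it is an illustration rather than Google’s actual code.

```python
def is_allowed(path: str, rules: list[tuple[str, str]]) -> bool:
    """Apply longest-match precedence to (directive, prefix) rules."""
    best_length = -1
    allowed = True  # a path that matches no rule is crawlable
    for directive, prefix in rules:
        if not path.startswith(prefix):
            continue
        is_allow = directive.lower() == "allow"
        longer = len(prefix) > best_length
        tie_for_allow = len(prefix) == best_length and is_allow
        if longer or tie_for_allow:
            best_length = len(prefix)
            allowed = is_allow
    return allowed

# Lines 2 and 3 of the file above.
RULES = [
    ("Disallow", "/wp-admin/"),
    ("Allow", "/wp-admin/admin-ajax.php"),
]

print(is_allowed("/wp-admin/options.php", RULES))     # False
print(is_allowed("/wp-admin/admin-ajax.php", RULES))  # True
print(is_allowed("/blog/hello-world/", RULES))        # True
```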

Line – 5: Sitemap: https://akramhossain.com/sitemap_index.xml

Here, we point to the XML sitemap of our website, so Google and other search engines can easily understand the website’s structure and its indexable links.

An XML sitemap is a crawler-readable file that lists the website’s URLs so search engines can find and index them.

Line – 5 isn’t required, but you can include it here.
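A crawler picks up the Sitemap line independently of the User-agent groups. As a rough illustration, Python’s standard-library `urllib.robotparser` (Python 3.8 or later) exposes it through `site_maps()`:

```python
from urllib.robotparser import RobotFileParser

ROBOTS_TXT = """\
User-agent: *
Disallow: /wp-admin/
Allow: /wp-admin/admin-ajax.php
Sitemap: https://akramhossain.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# site_maps() returns a list of sitemap URLs, or None if the file has none.
print(parser.site_maps())
```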

Lines 7 and 8 follow the same pattern: another rule for all user agents, this time disallowing a single JSON file in the uploads folder.

This is a simple and well-structured file for most regular websites, but if you run an eCommerce website, the robots.txt structure should be different.

Let’s have a look;

Robots.txt File for eCommerce Website

First of all, let’s have a look at how we should use a robots.txt file for an eCommerce website:

User-agent: *

Disallow: /checkout/

Disallow: /cart/

Disallow: /account/

Disallow: /wishlist/

Disallow: /search/

Disallow: /login/

Disallow: /admin/

Disallow: /private/

Disallow: /restricted/

# Allow crawling of product pages and categories

Allow: /products/

Allow: /categories/

Here you can see the structure is the same; the difference is the longer Disallow list.

Lines 2 to 10 are disallowed for obvious reasons. Just look at them: checkout, cart, account, wishlist, and similar private pages don’t need to be indexed.

These pages should not show up publicly in search results. If you don’t tell search engines not to crawl and index these URLs, it can hurt you.

It will waste your crawl budget and may surface essential and confidential information in search results.

Caution: First, consider for each URL whether it should be indexed or not. If you add an inappropriate Disallow rule here, it can de-index your whole website from Google.

Be careful before adding even a single rule, and check the affected URLs with the free Rank Math robots.txt tester.

The best practice is to always check a URL before adding it to the Allow or Disallow list.
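One way to make that check a habit is to test a draft file against the URLs you cannot afford to lose before you upload it. Here is a minimal Python sketch using the standard-library `urllib.robotparser`. The `example.com` URLs, the draft rules, and the helper name `blocked_urls` are assumptions for this example, and this parser does not expand `*` wildcards, so it only suits plain path rules.

```python
from urllib.robotparser import RobotFileParser

def blocked_urls(robots_txt: str, must_crawl: list[str]) -> list[str]:
    """Return the URLs from `must_crawl` that `robots_txt` would block."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in must_crawl if not parser.can_fetch("*", url)]

# Hypothetical draft with an over-broad rule:
# "/product" also matches every URL under "/products/".
DRAFT = """\
User-agent: *
Disallow: /checkout/
Disallow: /cart/
Disallow: /product
"""

MUST_CRAWL = [
    "https://example.com/products/red-shoe",
    "https://example.com/categories/shoes",
]

print(blocked_urls(DRAFT, MUST_CRAWL))
```

If the printed list is not empty, the draft would block pages you want in search results, so fix the rule before uploading the file.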

I hope you now understand the concept of the robots.txt file and can use it for your website, whether it’s an eCommerce website, blog, news site, magazine, or any other website.

Common Mistake of Robots.txt File

Every website is different and has its own page structure.

So your robots.txt rules will differ from those of other sites, and you will come across different types of rules, like:

User-agent: *

Disallow: /checkout/

Disallow: /customer/

Disallow: /cart/

Disallow: /*index.scss

Disallow: /*reqwest/index

Disallow: /wangpu/

Disallow: /shop/*.html

Disallow: /catalog/

Disallow: /*from=

Disallow: /wow/gcp/

Disallow: /wow/camp/

Look at Line – 5, Line – 6, Line – 8 and Line – 10; you can see the extra [*] before or after [/].

[*] is a wildcard: it means the rule isn’t tied to one specific URL and matches every URL that fits the pattern.

For example;

Line – 8: Disallow: /shop/*.html

Now, it can match different URLs like:

- https://akramhossain.com/shop/product1.html

- https://akramhossain.com/shop/product2.html

- https://akramhossain.com/shop/productX.html

Listing each of these URLs separately in the robots.txt file would be troublesome.

So instead, we state once that every .html URL under /shop/ is excluded from search engines.

In short, we use [*] to cover multiple URLs with a single rule.
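To make the wildcard matching concrete, here is a minimal Python sketch that turns a robots.txt path pattern into a regular expression. `pattern_matches` is a made-up helper; it follows the commonly documented semantics (`*` matches any run of characters, a trailing `$` anchors the end, and everything else is a prefix match) and is not any search engine’s actual implementation.

```python
import re

def pattern_matches(pattern: str, path: str) -> bool:
    """Check whether a robots.txt path pattern matches a URL path."""
    anchored = pattern.endswith("$")
    if anchored:
        pattern = pattern[:-1]
    # Escape literal text and turn each * into "any run of characters".
    regex = ".*".join(re.escape(part) for part in pattern.split("*"))
    if anchored:
        regex += "$"
    # re.match anchors at the start, so unanchored patterns act as prefixes.
    return re.match(regex, path) is not None

print(pattern_matches("/shop/*.html", "/shop/product1.html"))  # True
print(pattern_matches("/shop/*.html", "/shop/product1"))       # False
print(pattern_matches("/*from=", "/catalog?from=home"))        # True
```

Python’s built-in `urllib.robotparser` does not expand `*` inside paths, which is why this sketch does the matching by hand.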

Again, you should understand the theory and mechanism of the robots.txt file before you apply it to your website.

Otherwise, a mistake can cost you dearly.

Is robots.txt required for every website?

No, you don’t need this file if you have a simple website built with plain HTML or a simple custom-coded site with no confidential pages to keep out of search results.

Basically, it’s a set of instructions that tells search engines where they should and should not crawl. You can also use it to keep search crawlers away from areas they have no business crawling, but treat it as a request that well-behaved crawlers honor rather than a security measure.

Final Line

The robots.txt file lets you tell search engines which URLs to crawl and index and which URLs to leave alone.

It helps you save crawl budget, so Google can spend its time on your quality pages.

Always think about whether each URL is important or not.

If you think some of your URLs should not be indexed, add them to the Disallow list.

A proper robots.txt file helps with faster indexing and gives search engines a clear signal that you take the technical side of your website seriously.

Lastly, before going live, don’t forget to test your URLs in a robots.txt tester for extra safety.